An RDD (Resilient Distributed Dataset) is the fundamental data structure and the primary data abstraction of Apache Spark and Spark Core. It has been available since the first Spark release. To convert a Dataset or DataFrame to an RDD, use its rdd accessor (df.rdd in PySpark and Scala, rdd() in Java).

The first thing a Spark program requires is a context (a SparkContext), which interfaces with the cluster to use. In general, input RDDs can be created using the following methods of the SparkContext class: parallelize, datastoreToRDD, and textFile. (datastoreToRDD belongs to the MATLAB API for Spark; parallelize and textFile also exist in PySpark and Scala.) The following snippet shows how to create an RDD by loading an external dataset:

> lines_rdd = sc.textFile("nasa_serverlog_20190404.tsv")
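As a rough sketch of the two SparkContext methods named above, the helper below builds one RDD from an in-memory collection and one from a text file. The function name `make_rdds`, the sample numbers, and the local master setting are illustrative assumptions, not part of the original text. PySpark is imported only inside `main()`, so the helper itself can be read and exercised without a cluster.

```python
def make_rdds(sc, path="nasa_serverlog_20190404.tsv"):
    """Return (rdd_from_collection, rdd_from_file) built from a SparkContext.

    `sc` is assumed to be a pyspark.SparkContext; `path` reuses the file
    name from the text and is not checked here, because textFile is lazy.
    """
    # parallelize distributes a collection that lives in the driver program.
    numbers_rdd = sc.parallelize([1, 2, 3, 4, 5])
    # textFile creates an RDD with one element per line of the file.
    lines_rdd = sc.textFile(path)
    return numbers_rdd, lines_rdd


def main():
    # Imported lazily so the helper above does not require PySpark to load.
    from pyspark import SparkConf, SparkContext

    # Assumption: a local master is enough for a quick experiment.
    conf = SparkConf().setMaster("local[*]").setAppName("rdd-creation-sketch")
    sc = SparkContext.getOrCreate(conf)
    numbers_rdd, lines_rdd = make_rdds(sc)
    # collect() is an action, so this line triggers the actual computation.
    print(numbers_rdd.collect())
    # lines_rdd is not read until an action such as count() is called on it.
    sc.stop()
```

Calling `main()` on a machine with PySpark installed runs the sketch. The file is only opened once an action is invoked on `lines_rdd`, so a missing file goes unnoticed until then.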

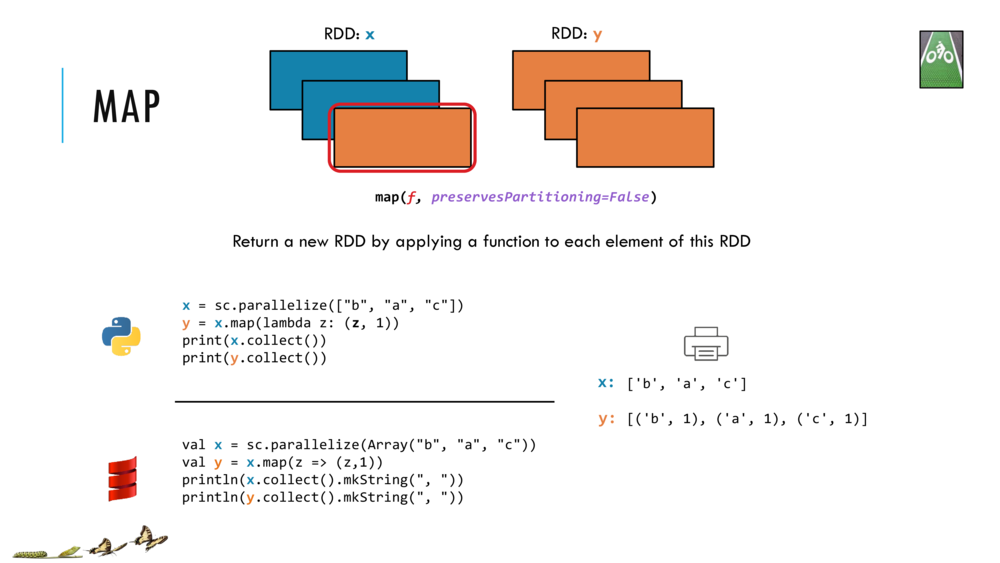

A common first step is to form key-value pairs by mapping every string to a value of 1.

To create a PySpark DataFrame from an existing RDD, we will first create an RDD using the parallelize() method and then convert it into a PySpark DataFrame using the createDataFrame() method of SparkSession.

Each instance of an RDD has at least two methods corresponding to the Map-Reduce workflow: map and reduce. The term 'resilient' in 'Resilient Distributed Dataset' refers to the fact that Spark can automatically reconstruct a lost partition by recomputing it from the RDDs it was derived from.

Spark SQL, a Spark module for structured data processing, provides a programming abstraction called DataFrames and can also act as a distributed SQL query engine. A Spark DataFrame is an integrated data structure with an easy-to-use API for simplifying distributed big data processing. First, we will provide a holistic view of these abstractions in one place. There are three ways to create a DataFrame in Spark by hand.

The Spark web interface facilitates monitoring, debugging, and managing Spark.
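The key-value pairing and the RDD-to-DataFrame conversion described above can be sketched as follows. The names `to_pair` and `main`, the sample words, and the column names are assumptions made for illustration. The counting step uses `reduceByKey`, which is one common way to aggregate such pairs and is not necessarily what the original example did.

```python
def to_pair(word):
    """Pair a string with the value 1, forming a (key, value) tuple."""
    return (word, 1)


def main():
    # Imported lazily so to_pair can be used without PySpark installed.
    from pyspark.sql import SparkSession

    # Assumption: a local session is sufficient for this sketch.
    spark = (
        SparkSession.builder.master("local[*]")
        .appName("pairs-to-dataframe-sketch")
        .getOrCreate()
    )
    sc = spark.sparkContext

    # Step 1: create an RDD from a driver-side collection.
    words_rdd = sc.parallelize(["spark", "rdd", "spark"])
    # Step 2: map every string to a (string, 1) pair.
    pairs_rdd = words_rdd.map(to_pair)
    # Step 3: sum the values per key.
    counts_rdd = pairs_rdd.reduceByKey(lambda a, b: a + b)
    # Step 4: convert the RDD of tuples into a DataFrame with named columns.
    df = spark.createDataFrame(counts_rdd, ["word", "count"])
    df.show()
    spark.stop()
```

Keeping `to_pair` as a plain function rather than a lambda makes it easy to check on its own before handing it to `map`.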

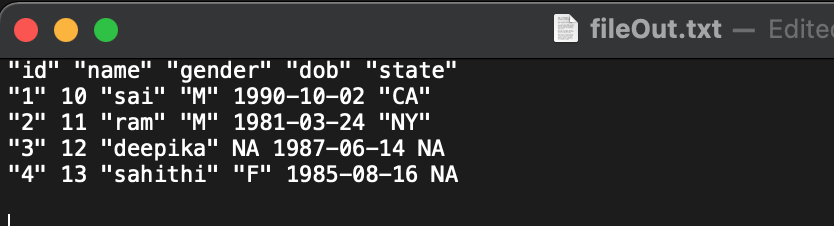

Function to clean text data in Spark RDD driver

Spark provides two ways to create RDDs: loading an external dataset and parallelizing a collection in your driver program. To explain RDD creation, we use a data file that is available in the local file system. An RDD can then be converted to a DataFrame using the toDF() method.
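The text does not show the cleaning function itself, so what follows is only one plausible sketch: lowercase each line, drop ASCII punctuation, and collapse whitespace. The names `clean_line` and `clean_rdd`, the cleaning rules, and the column name `line` are all assumptions. The file name is reused from the earlier snippet.

```python
import re
import string

# Translation table that deletes every ASCII punctuation character.
_PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def clean_line(line):
    """Lowercase a line, remove punctuation, and collapse whitespace."""
    lowered = line.lower()
    no_punct = lowered.translate(_PUNCT_TABLE)
    return re.sub(r"\s+", " ", no_punct).strip()


def clean_rdd(lines_rdd):
    """Apply clean_line to every element and drop lines that end up empty."""
    return lines_rdd.map(clean_line).filter(lambda s: s != "")


def main():
    # Imported lazily so the pure-Python helpers work without PySpark.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder.master("local[*]")
        .appName("clean-text-sketch")
        .getOrCreate()
    )
    # Assumption: the file from the earlier snippet exists locally.
    lines_rdd = spark.sparkContext.textFile("nasa_serverlog_20190404.tsv")
    cleaned = clean_rdd(lines_rdd)
    # toDF needs an active SparkSession; each row must be a tuple.
    df = cleaned.map(lambda s: (s,)).toDF(["line"])
    df.show(5, truncate=False)
    spark.stop()
```

Because `clean_line` is an ordinary Python function, Spark ships it to the executors when it is passed to `map`. The driver only defines it and receives the results.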

When a map is followed by a reduce, Spark can carry out both steps on the cluster. That way, the reduced data set rather than the larger mapped data set will be returned to the user.
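A minimal sketch of that map-then-reduce pattern is shown below. The function name `total_line_length` and the choice of summing line lengths are illustrative assumptions. The argument is expected to be an RDD of strings, but any object with compatible `map` and `reduce` methods would work.

```python
def total_line_length(lines_rdd):
    """Sum the lengths of all lines in an RDD of strings.

    map is a transformation and stays lazy; reduce is an action, so only
    the single aggregated number travels back to the driver.
    """
    lengths_rdd = lines_rdd.map(len)
    return lengths_rdd.reduce(lambda a, b: a + b)
```

With a real SparkContext `sc`, this could be called as `total_line_length(sc.parallelize(["ab", "cde"]))`. Note that `reduce` raises an error on an empty RDD.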
